Designing Pivotal Practice: An AI Roleplay Experience for Manager Growth

Managers rarely get to practice the hardest parts of leadership—giving feedback, managing conflict, navigating defensiveness. Pivotal Practice makes that possible through AI-powered roleplays that turn theory into action. As the sole designer, I reimagined how managers practice and measure real-world skills—launching with enterprise clients like Amazon, Salesforce, and Uber, driving 23–63% skill growth, 86% confidence gains, and $1.5M in new and renewal revenue.

About Praxis Labs

Praxis Labs is an AI-powered learning platform on a mission to make workplaces work better for everyone. Through coaching, roleplay, and skill assessment, we help people develop the critical human skills needed to succeed as leaders. Organizations use our platform to build these skills at scale and drive higher engagement and performance.

About Pivotal Practice

Pivotal Practice is an AI-powered roleplay experience that helps managers practice research-backed leadership skills proven to impact core business outcomes in the modern, global, diverse workplace. It gives people a safe space to practice the conversations that matter most: giving feedback, coaching performance, and navigating conflict. The result is an immersive, scalable, and measurable way to build inclusive leadership skills.

Role

As the sole designer for Pivotal Practice, I led the end-to-end design of the experience, shaping the conversation experience, design system, learning flow, and AI interaction.

Team

1 Product Owner

2 Learning Designers

2–3 Software Engineers

1 QA Engineer

Responsibilities

User Research

Usability Testing

Product Design

Visual Design

Design Systems

Design Process

Discover

Building on 1.0 research and feedback, we ran targeted discovery with client design partners and internal audits to refine the product vision.

Define

Aligned on core problem framing and success metrics; prioritized design goals for voice, coaching, and learner experience consistency

Develop

Rapid prototyping and weekly design sprints with continuous stakeholder feedback to improve the core learner experience

Deliver

Beta launched with design partner clients (January 2025), followed by general availability (April 2025) on a newly rebranded, AI-native platform with 14 scenarios

01

Discover

The Problem

Leadership training often fails where it matters most—real-world application.

Too much theory, not enough practice

Hard to scale across learners and clients

No easy way to measure whether people are improving

We needed an immersive, scalable, and measurable solution.

02

Define

The Challenge

We built an immersive, scalable, and measurable solution with Pivotal Practice 1.0. The product resonated but had limits:

Clients wanted more variety and org-specific customization

Learners expected more dynamic, responsive interactions

Content creation was slow, relying on manual scripting and voice acting

Our Approach

For Practice 2.0, we reimagined the product using GenAI to:

Speed up content creation and enable org-specific customization

Make conversations more dynamic, human, and responsive

Test quickly and build a scalable, immersive learning experience that’s measurable and ROI-driven

03

Develop

Evolving the Experience

Phase 1: Concept Validation

Tested early text and voice prototypes with learners and clients to validate memory, summaries, and just-in-time coaching flow.

Phase 2: Product Refinement

We ran fast 1–2 week design–build–test cycles, with usability tests measured against success targets:

80%+ ease of use

70%+ coaching helpfulness

70%+ memory recall

70%+ would continue using

40%+ very disappointed if removed
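
Targets like these are easier to track across test rounds when they are written down as thresholds and each round's results are checked against them. The snippet below is a minimal sketch, not the team's actual tooling: the metric keys and the `unmet_targets` helper are hypothetical names, and only the threshold percentages come from the list above.

```python
from __future__ import annotations

# Success targets from the usability tests, expressed as fractions of respondents.
# The key names are hypothetical labels chosen for this sketch.
TARGETS = {
    "ease_of_use": 0.80,
    "coaching_helpfulness": 0.70,
    "memory_recall": 0.70,
    "would_continue_using": 0.70,
    "very_disappointed_if_removed": 0.40,
}


def unmet_targets(results: dict[str, float]) -> list[str]:
    """Return the metrics whose measured rate is below its target.

    A metric missing from `results` is treated as unmet.
    """
    return [
        name
        for name, target in TARGETS.items()
        if results.get(name, 0.0) < target
    ]
```

Calling `unmet_targets` with one round's measured rates returns the metrics that still need work, in the order listed above; an empty list means every target was met.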

Phase 3: Scenario Expansion

Tested the experience across leadership challenges, expanding from feedback on communication to performance coaching and leading team change.

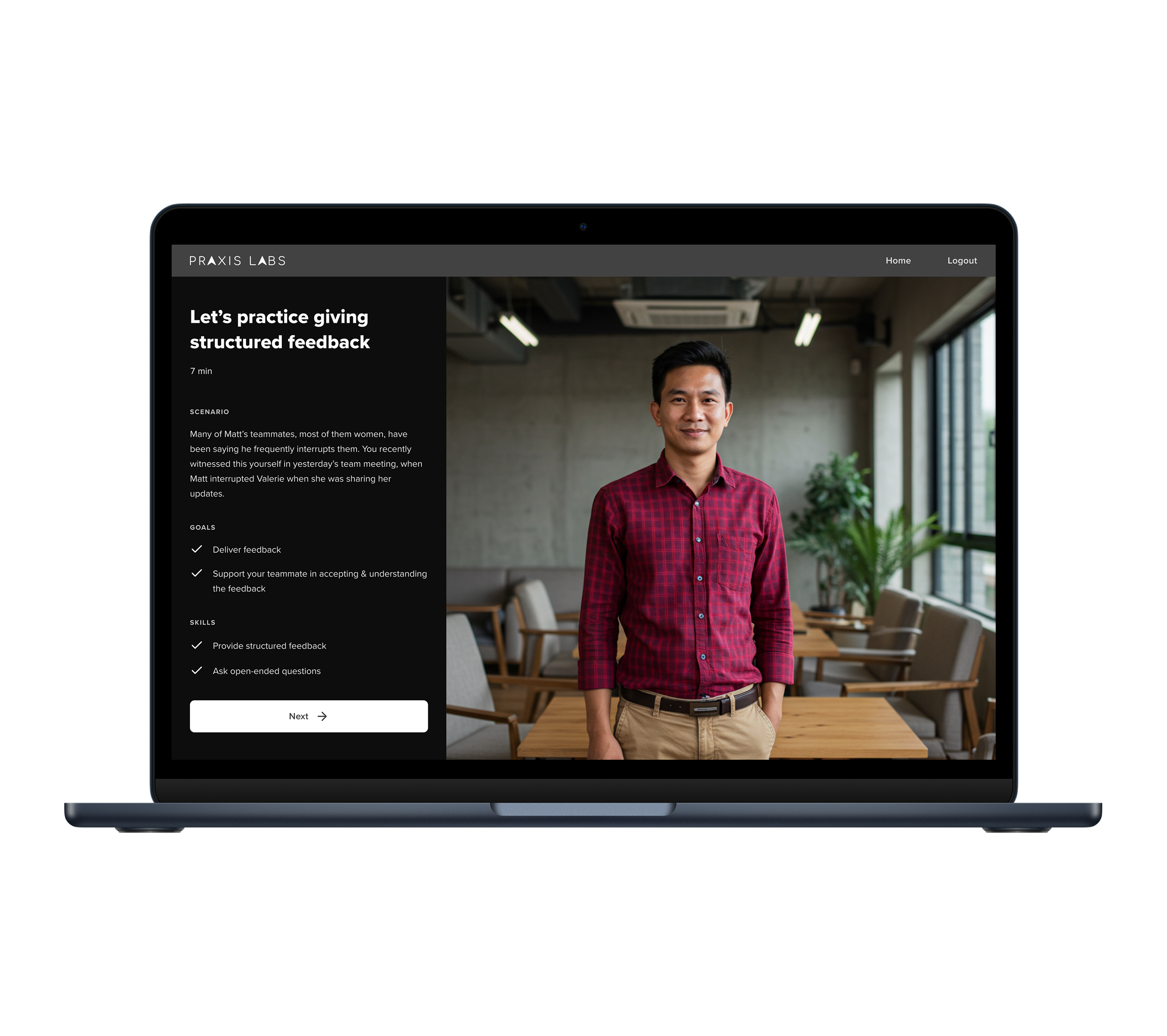

Intro Page

Early testing showed our first intro page didn’t give learners enough context. They were unclear about the conversation goal and how they would know when they had finished.

I redesigned it to better prepare them without overwhelming them.

Outlined the scenario and coaching goal

Explained which skills they would practice and why

Used clear, supportive language and reduced distractions

Skills Pages

We added a Skills Preview step so learners could see 2–3 key behaviors they’d practice. Early designs let users skip this, but many who skipped felt confused.

I changed the flow to make reviewing skill pages required.

Provided skill purpose, plain-language description, and example

Allowed experienced learners to quickly tap through if they wanted

Prevented frustration by giving everyone a consistent preview

Managers liked the quick refresher before starting conversations

Voice vs. Text Interaction

We started with text-based roleplays to test and validate the core experience. Once stable, we explored adding voice to make conversations feel more natural and immersive.

At one point, we supported both options, but learner feedback and our instincts aligned:

Text felt flat and didn’t differentiate us

Voice was more realistic and better for building communication skills

Voice helped learners prepare for real workplace conversations

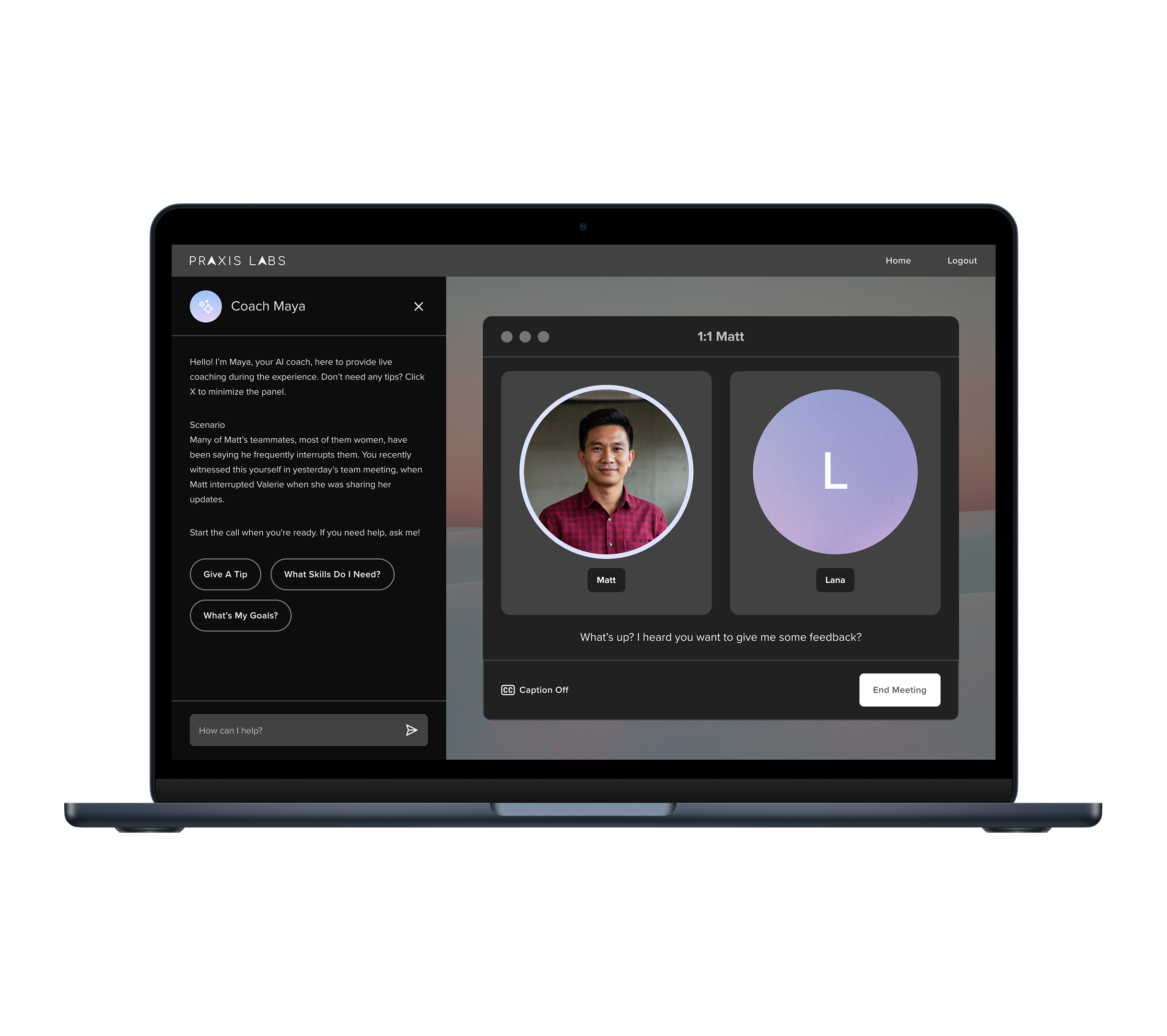

Meeting Simulation

Early user testing revealed that many learners didn’t realize they should speak aloud and weren’t sure whether their audio was being picked up.

We redesigned it to feel more natural and intuitive—like a real video meeting.

Simplified the layout to remove unnecessary UI elements

Had the AI character speak first to signal it was a live conversation

Added voice activity indicators and mic input feedback for clarity

Included live captions to support accessibility and reinforce what was said

Images vs. Animation

We explored bringing back animated characters like those we had used in earlier products. In testing, learners responded more positively to realistic still images, so we chose to move forward with AI-generated still photos for characters.

Animation had technical issues: jittery lip sync, missing features, inconsistent rendering

Learners said still images felt more believable and less distracting

Voice acting created enough emotional presence without needing animation

Photo-based characters allowed faster scenario creation and scaled better across new scenarios

Real-Time Coaching

Early on, learners sometimes struggled to move conversations forward or understand their goal. We introduced Maya’s real-time coaching to provide support in the moment. This became a differentiator from other tools.

Repeated the scenario and coaching goal in the side panel for reference

Delivered one automatic tip at around 34 seconds to avoid disruption

Allowed learners to request additional tips on demand

Redesigned coaching as a side panel to reduce visual clutter and distractions

Multiple testing rounds helped us fine-tune the tone, timing, and overall experience.
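
The tip behavior described above can be sketched as a small piece of session state. This is an illustrative sketch under stated assumptions, not the production implementation: the class and method names are hypothetical, and tips are assumed to come from a pre-written list (in the product they may well be generated by the model). Only the roughly 34-second delay, the single automatic tip, and the on-demand requests come from the design.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# From the case study: one automatic tip at about 34 seconds.
AUTO_TIP_DELAY_S = 34.0


@dataclass
class CoachingSession:
    """Tracks which coaching tips have been shown in one roleplay (hypothetical)."""

    tips: list[str]  # assumed pre-written tips, served in order
    auto_tip_sent: bool = False
    shown: list[str] = field(default_factory=list)

    def _next_tip(self) -> Optional[str]:
        # Serve the next unseen tip, or None once the list is exhausted.
        if len(self.shown) < len(self.tips):
            tip = self.tips[len(self.shown)]
            self.shown.append(tip)
            return tip
        return None

    def on_tick(self, elapsed_s: float) -> Optional[str]:
        """Return the single automatic tip once the delay has passed, else None."""
        if self.auto_tip_sent or elapsed_s < AUTO_TIP_DELAY_S:
            return None
        self.auto_tip_sent = True
        return self._next_tip()

    def on_request(self) -> Optional[str]:
        """Return another tip when the learner asks for one."""
        return self._next_tip()
```

In this sketch the automatic tip fires at most once per session, however often `on_tick` is called, while `on_request` keeps serving tips until the list runs out. Whether an earlier learner request should suppress the automatic tip is a design choice the sketch leaves open.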

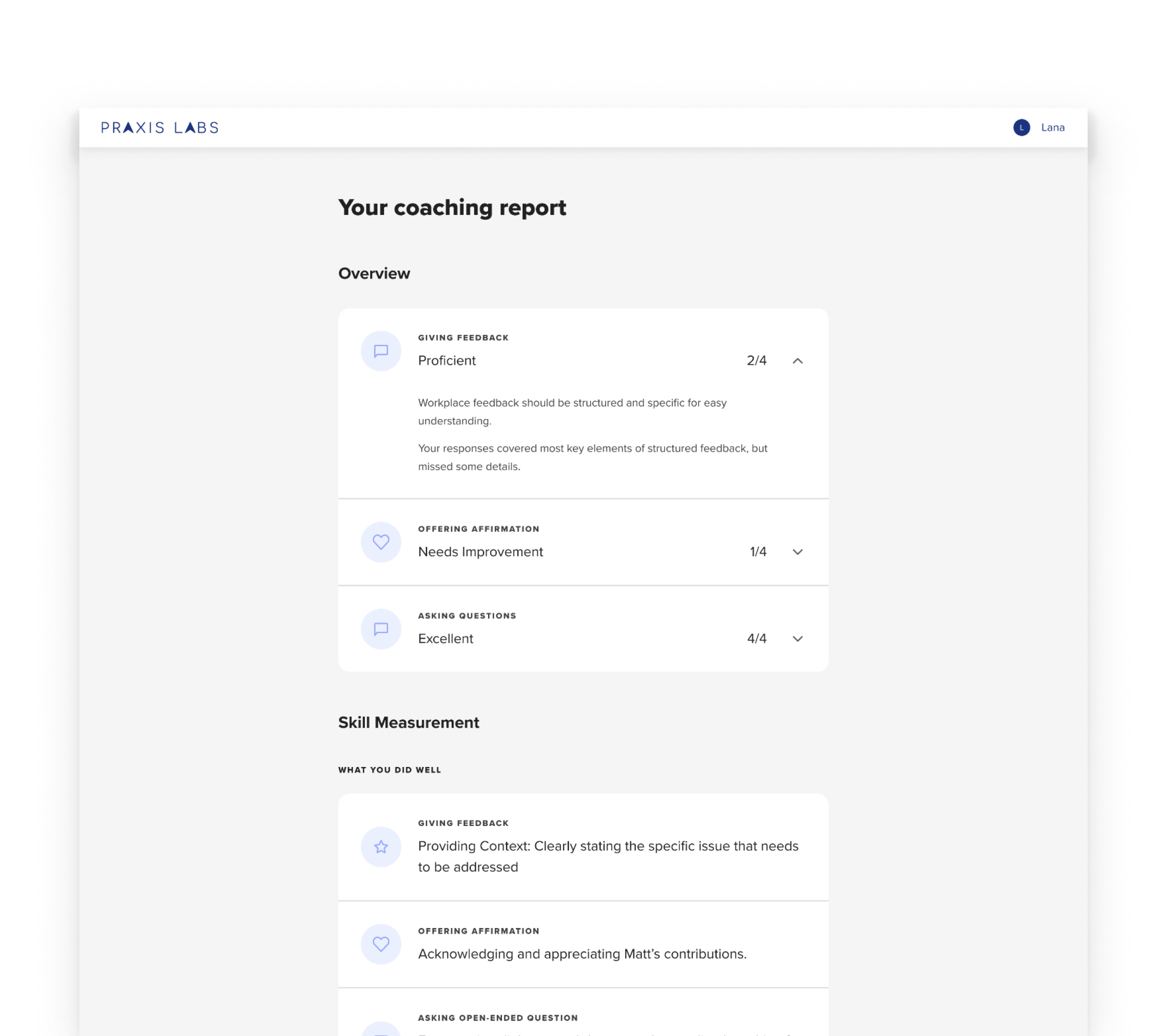

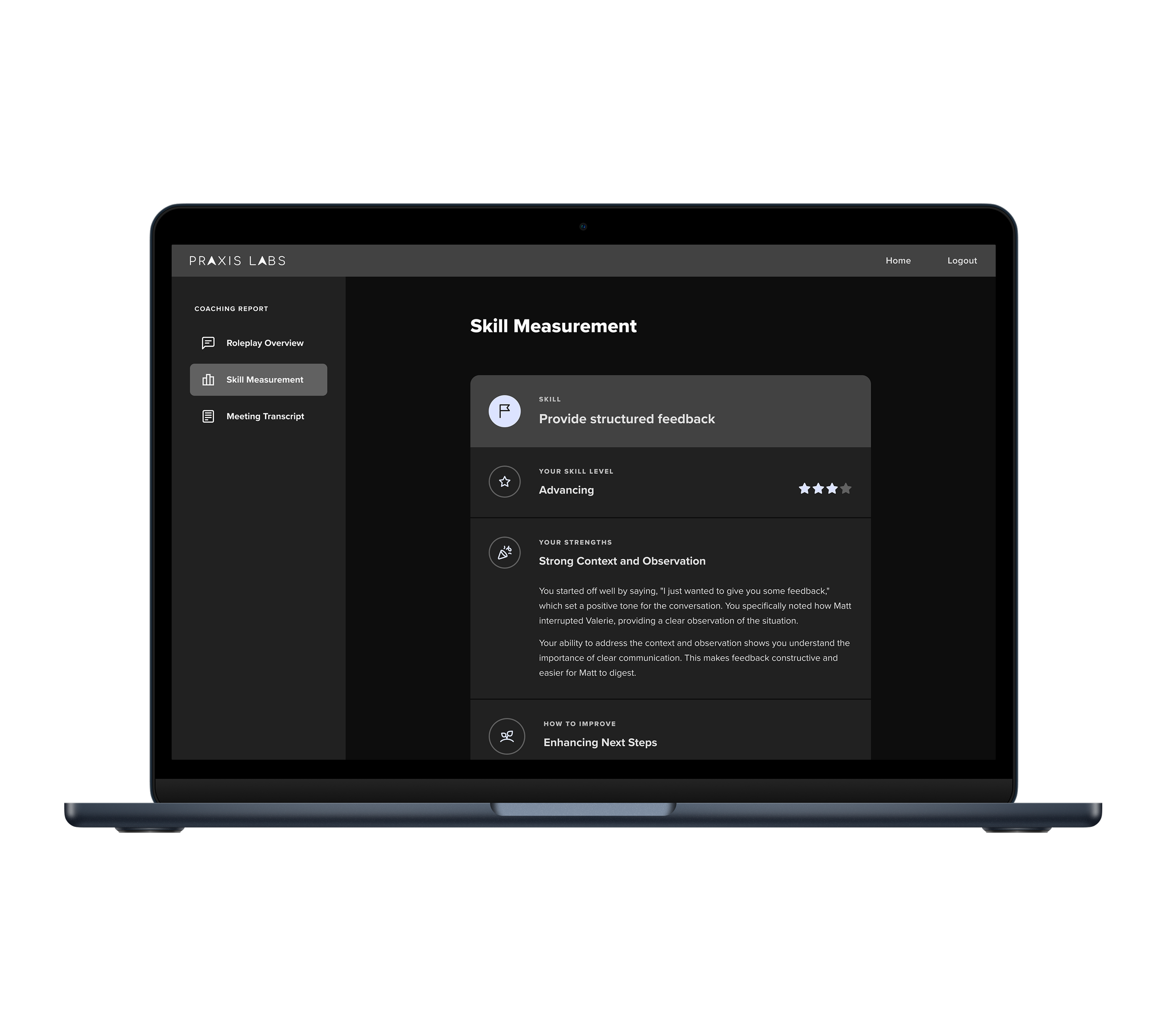

Coaching Report

In early demos, clients weren’t sure how we calculated skill scores or what we measured. The original overview was too vague, and the feedback wasn’t actionable enough. I redesigned the report to:

Show how well the learner achieved the overall goal

Group skill measurement by specific skills

Add a transcript so learners could review where to improve

Replace unclear numeric scores with intuitive star ratings

Learners found it valuable to reflect on their responses, and the feedback felt more trustworthy in context. We also saw increased engagement with the report.

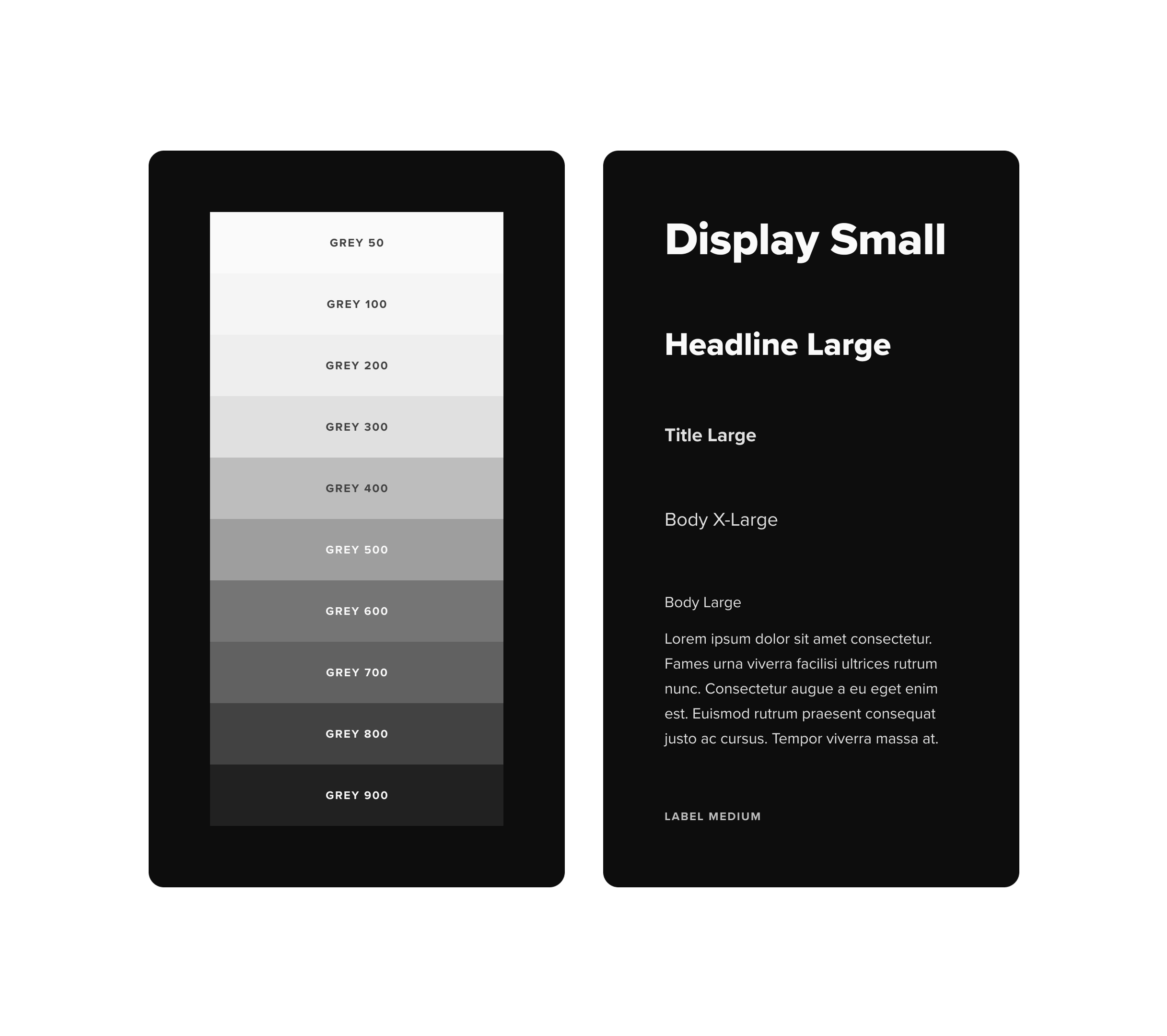

Visual Design

We needed a system that supported learning without distracting from it. I designed a visual design system that reduced cognitive load, aligned with our product brand, and applied accessibility best practices.

Minimized visual noise and simplified layouts to lower cognitive load

Applied consistent patterns for hierarchy, typography, spacing, and reusable components

Used a dark theme with off-white text to reduce eye strain and keep focus on the conversation

Ensured accessibility with left-aligned text, 16px minimum font size, high contrast, and generous spacing

Character Design

To make practice feel more immersive, I translated character backstories and scenarios into visuals and voice design that reinforced the narrative.

Established a visual prompt library to generate diverse, high-quality character images with subtle cues that reflected each scenario

Created a voice design prompt library so tone and language matched character roles, backstories, and context

Iterated continually to maintain quality and consistency as AI models evolved (ImageFX, Hume EVI3, Claude Sonnet)

These choices helped learners immediately understand the dynamics of each scenario and made conversations feel more authentic and engaging.

04

Deliver

Learner Journey Walkthrough

After multiple rounds of iteration and testing, we created a seamless experience designed for clarity, presence, and psychological safety.

The learner journey flows through five core stages:

Intro Page

Skill Pages

Meeting Simulation

In-the-Moment Coaching

Coaching Report

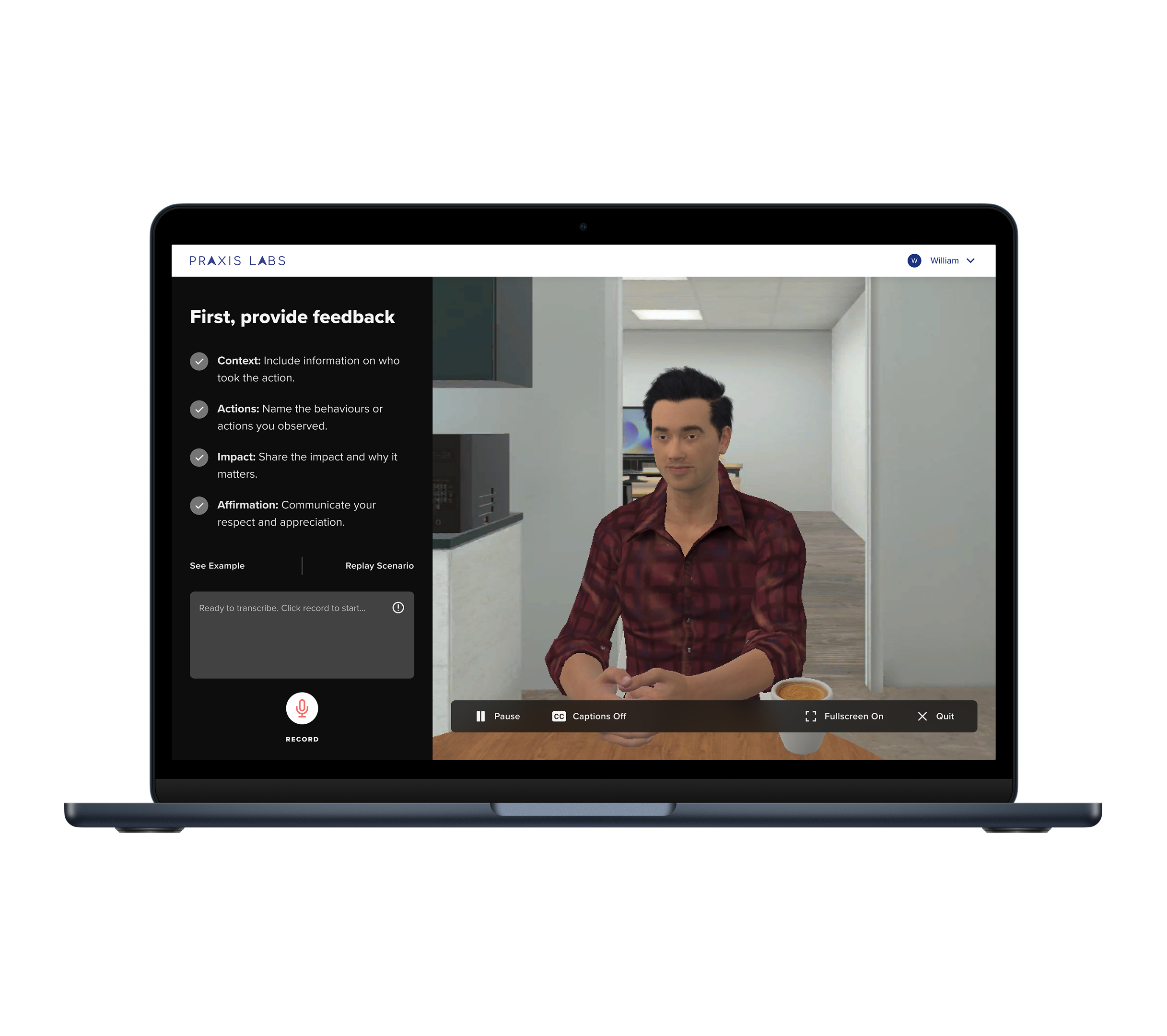

Intro Page

Learners land on a short, welcoming screen designed to reduce anxiety.

Conversational tone

Clean, dark-mode layout

Character photo provides emotional context

Minimal cognitive load to set expectations gently

The goal: foster presence and safety before learners begin.

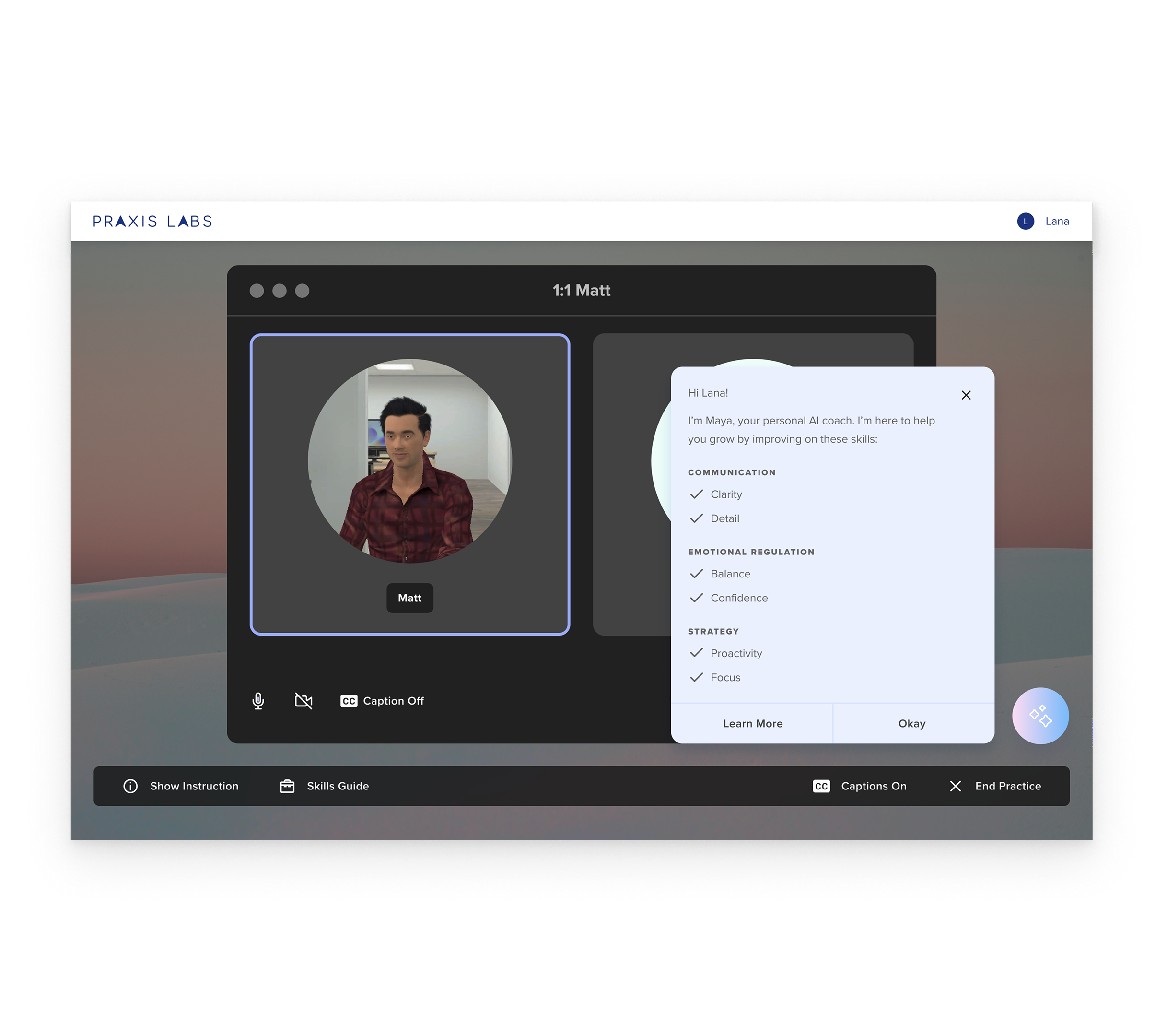

Skill Page

Learners preview 2–3 inclusive leadership skills they will practice.

Each skill includes:

Purpose

Plain-language description

Quick example

I designed this page to be scannable, structured, and low-pressure. Consistent spacing and clear hierarchy help orient learners without overwhelming them.

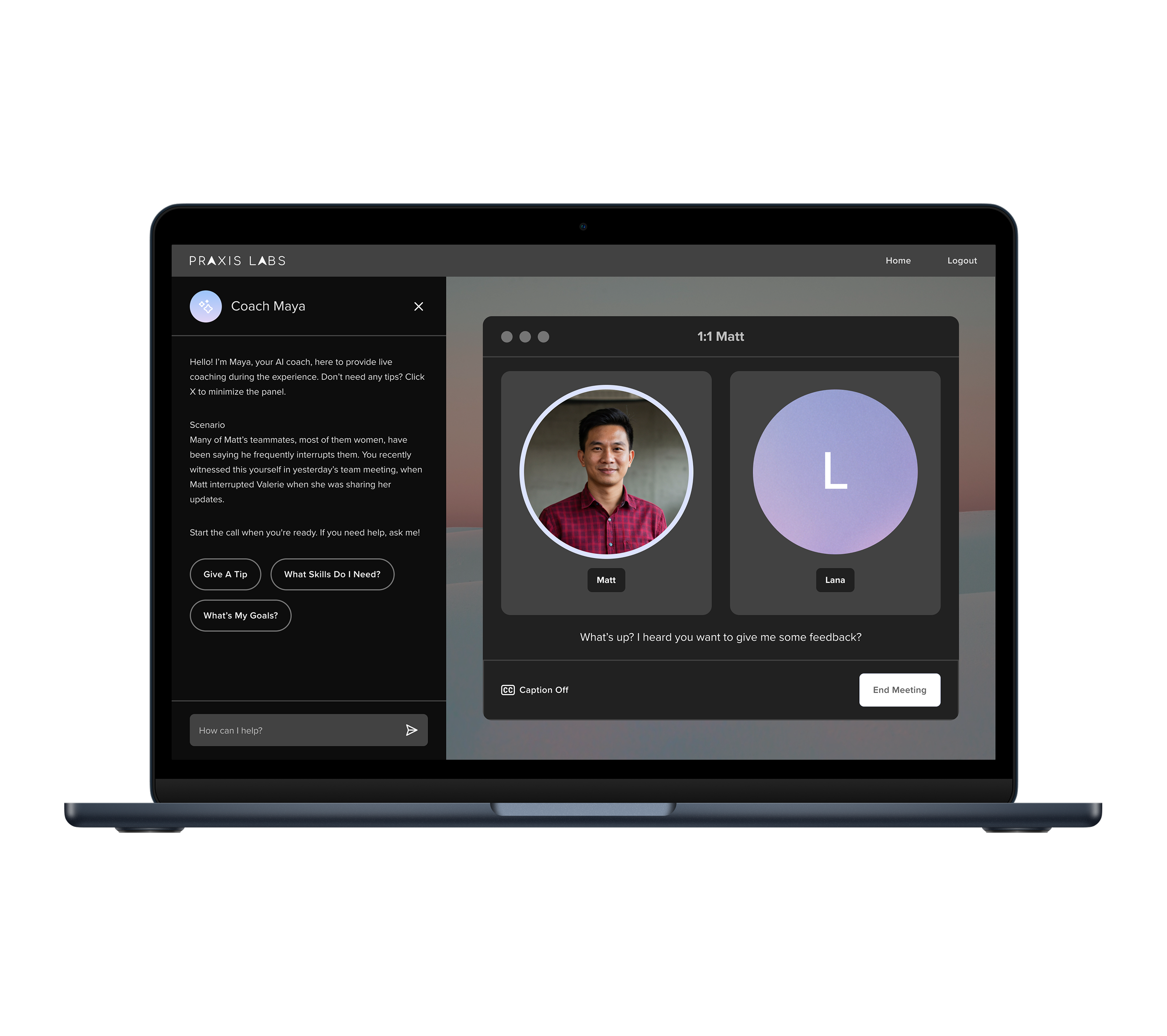

Meeting Simulation

I redesigned this screen to feel like a familiar video call, removing clutter from Practice 1.0.

Learners speak aloud to an AI character in real time

Character speaks first to signal a live conversation (early testing insight)

Voice indicators, mic input feedback, and live captions increase trust and accessibility

This simplified, conversational interface helped learners feel confident, present, and focused during practice.

Real-Time Coaching

We added Maya’s real-time coaching to guide learners during conversations—not just afterward.

Scenario + goal shown in side panel for reference

One automatic tip triggers at ~34 seconds to offer gentle support

Learners can request additional tips on demand

Coaching panel is collapsible to reduce distraction

This balance gave learners confidence to move forward when stuck, while respecting their flow if they preferred independent practice.

Coaching Report

After the session, learners get skill-based feedback.

Replaced numeric scores with 5-star ratings to reduce anxiety

Feedback organized by skill, with “what went well” and “what to improve”

Transcript of the conversation added for reflection and trust

Learners can revisit key moments for deeper learning and behavior change

05

Impact

Business Outcome

Reignited Growth

Re-engaged accounts at risk of churn and unlocked stalled deals

$1.5M in New and Renewal Revenue

Generated $1.5M in new and renewal deals within 6 months of beta

Enterprise Adoption

Adopted by enterprise clients including Amazon, Salesforce, Uber, Accenture, ADP, Conagra

Faster Creation

Reduced scenario creation time from 2 months to 2 weeks

Learner Outcome

88

Average satisfaction (outperformed the 64 eLearning benchmark)

89%

Believed wider adoption would positively impact team performance

86%

Reported higher confidence applying skills on the job

63%

Improvement across restating, giving feedback, and validating emotions

90%

Said the experience improved their performance on the job

88%

Said they would use it to prepare for future conversations

Learner & Client Quotes

This is the best on-demand learning experience for managers I’ve ever used. The opportunity to practice challenging conversations and have real-time discussions that could go anywhere is really something special.

People Manager

One of our biggest challenges is scaling our Learning team’s reach and impact. Many of our people leaders struggle with difficult conversations, but we can only facilitate workshops for up to ~200 leaders per year. I think this is a powerful tool to help us scale.

Fortune 500 Company

This has an inclusion lens built-in. When you have a performance conversation, it’s usually something else impacting it, and with the dynamic simulation, it requires people to move more quickly, incorporate compassionate listening, and be responsive, just like in real-life.

Fortune 500 Company

As a newer manager, I’ve already had to have some tough conversations with direct reports, and it’s been tough to know if I’m “doing it right.” This experience practicing having hard conversations with AI was really helpful, as it has allowed me to have space to “mess up”, get feedback and get more practice in a safe space.

People Manager

06

Learnings

Designing an AI Product

Design flexible systems

We created templates, guardrails, and adaptable flows rather than scripts, so the product feels intentional while adapting to unpredictable user inputs.

Design for unpredictability

We built in fallback behaviors and system protections to maintain the learner experience even when AI responses were unexpected.

Test relentlessly

We stress-tested edge cases and adversarial conversations to refine those fallback behaviors and protect the learner experience.

Designing with AI

Experiment constantly

I use AI across writing, research, visuals, prototyping, and testing to explore where GenAI excels and where it struggles.

Stay current

I subscribe to newsletters from UX and education leaders who track AI advancements to continuously improve my approach.

Work with AI as a creative partner

AI helps me move faster, unstick design problems, and explore new ideas. I see AI as both collaborator and co-creator in my design process.

AI Team Collaboration

Create fast feedback loops

We organized as a GenAI Tiger Team using a Build + Recon model. The Build team prototyped weekly; the Recon team gathered external feedback bi-weekly to refine priorities.

Embrace ambiguity and experimentation

Traditional roles blurred: designers, PMs, and learning scientists collaborated on prompt design and prototyping. We defined “good enough” as “not obviously wrong” to ship quickly and keep momentum.

Build rituals to stay aligned

We held daily check-ins, weekly prioritization meetings, and cross-team collaboration sessions. During early development, we ran Tiger Feast, a weekly review of user feedback and test videos to guide iteration.